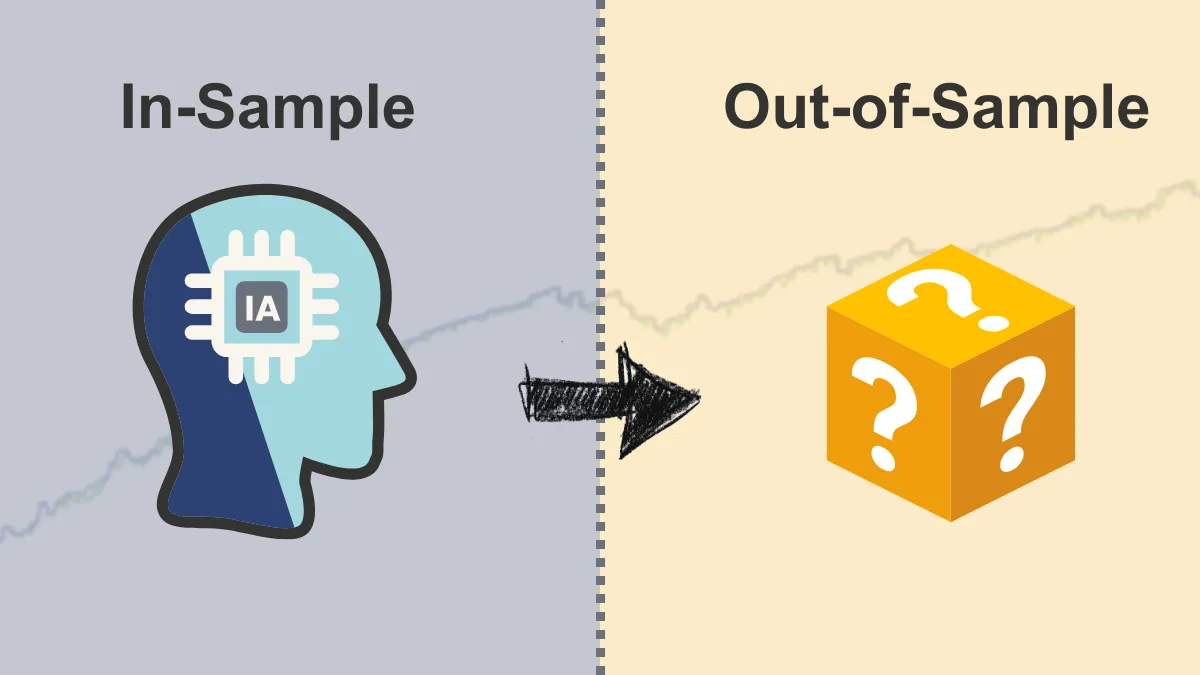

In-Sample Testing vs Out-of-Sample Testing: How to More Reliably Evaluate Your EA?

In the previous article, we discussed how to "optimize" your Expert Advisor (EA), which means adjusting its parameter settings so it performs better on historical data. We also mentioned the need to beware of the "overfitting" trap, where the EA fits past data too perfectly but may perform poorly in the future.

So, how do we know if the "best" parameter settings found through optimization truly learned the market's patterns or just "memorized" the past data?

This is where the concepts of In-Sample Testing and Out-of-Sample Testing become important: together, they help us evaluate an EA strategy more reliably.

What is In-Sample Testing?

Simply put:

In-Sample Testing uses the segment of historical data that you feed into the optimization process. It is like reviewing your textbook:

Imagine you are preparing for an exam by reviewing the highlighted key points in your textbook. During optimization, the EA "learns" from this in-sample data segment and finds the parameter settings that perform best within it.
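To make the "learning" step concrete, here is a minimal Python sketch of what an optimizer does with in-sample data. Everything in it is a hypothetical stand-in: the prices are a synthetic random walk rather than real quotes, the moving-average crossover is a toy strategy, and the parameter grid is arbitrary. It uses a brute-force grid search, one of the simplest ways an optimizer can work: every combination is scored on the in-sample slice only, and the highest scorer wins.

```python
import itertools
import random


def make_prices(n, seed=7):
    """Synthetic random-walk closes (hypothetical stand-in for real history)."""
    rng = random.Random(seed)
    prices = [100.0]
    for _ in range(n - 1):
        prices.append(prices[-1] + rng.gauss(0, 1))
    return prices


def sma(prices, n, i):
    """Simple moving average of the n closes ending at index i (inclusive)."""
    return sum(prices[i - n + 1 : i + 1]) / n


def backtest(prices, fast, slow):
    """Net points of a toy long-only MA crossover: hold long for the next bar
    whenever the fast SMA is above the slow SMA, stay flat otherwise."""
    profit = 0.0
    for i in range(slow - 1, len(prices) - 1):
        if sma(prices, fast, i) > sma(prices, slow, i):
            profit += prices[i + 1] - prices[i]
    return profit


def optimize(prices, fast_values, slow_values):
    """Score every (fast, slow) combination; return (profit, fast, slow) of the best."""
    best = None
    for fast, slow in itertools.product(fast_values, slow_values):
        if fast >= slow:
            continue  # skip combinations where the "fast" average is not faster
        profit = backtest(prices, fast, slow)
        if best is None or profit > best[0]:
            best = (profit, fast, slow)
    return best


prices = make_prices(1000)
in_sample = prices[:700]  # the "textbook" the optimizer studies
best_profit, best_fast, best_slow = optimize(in_sample, range(2, 11), range(12, 51, 4))
print(f"in-sample best: fast={best_fast}, slow={best_slow}, profit={best_profit:.2f}")
```

Whatever profit this prints was found by searching for the best fit to these 700 bars, so on its own it says nothing about how the same settings would do on data the search never touched.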

What is the purpose?

To find the parameter combination that allows the EA to perform best on this specific historical data segment.

What are its limitations?

Good performance on in-sample data does not guarantee good future performance, because the EA may have simply "memorized" the unique patterns or noise in this data segment rather than learned truly generalizable rules.

This is the risk of overfitting.

What is Out-of-Sample Testing?

Simply put:

Out-of-Sample Testing takes the "best" parameter settings found during in-sample testing and runs them on a completely separate segment of historical data that was never used during optimization. It is like taking a mock exam:

After reviewing the textbook (in-sample testing), you take a mock exam you have never seen before (out-of-sample data) to check how well you have actually learned. In the same way, Out-of-Sample Testing runs your EA with the optimized parameters on a segment of historical data it has never "seen".

What is the purpose?

To see whether the "best" parameter set still performs well on new, unseen historical data. This shows whether the EA has truly learned effective skills or merely "passed" the in-sample "exam".
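Continuing the exam analogy, the sketch below optimizes a single parameter on the in-sample slice, then freezes it and runs one plain backtest on the slice the optimizer never saw. The momentum rule, the synthetic prices, and the 70/30 split are hypothetical choices made for illustration only.

```python
import random


def make_prices(n, seed=11):
    """Synthetic random-walk closes (hypothetical stand-in for real history)."""
    rng = random.Random(seed)
    prices = [100.0]
    for _ in range(n - 1):
        prices.append(prices[-1] + rng.gauss(0, 1))
    return prices


def backtest(prices, lookback):
    """Toy momentum rule (hypothetical): hold long for the next bar whenever the
    close is above the close `lookback` bars earlier. The first `lookback`
    bars of the slice are only used as history, never traded."""
    profit = 0.0
    for i in range(lookback, len(prices) - 1):
        if prices[i] > prices[i - lookback]:
            profit += prices[i + 1] - prices[i]
    return profit


prices = make_prices(1000)
split = 700
in_sample, out_of_sample = prices[:split], prices[split:]

# Step 1: optimize on in-sample data only; out_of_sample is never passed in here.
candidates = range(1, 51)
best_lookback = max(candidates, key=lambda lb: backtest(in_sample, lb))
is_profit = backtest(in_sample, best_lookback)

# Step 2: freeze the chosen parameter and run one normal backtest on unseen data.
oos_profit = backtest(out_of_sample, best_lookback)

print(f"lookback={best_lookback}  in-sample={is_profit:.2f}  out-of-sample={oos_profit:.2f}")
```

Because these prices are a pure random walk, there is no real pattern to learn, so the out-of-sample figure will typically fall well short of the in-sample one. That gap is exactly what this test is designed to expose.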

How does it help you?

- If the EA still performs reasonably well on out-of-sample data (perhaps not as well as in-sample, but acceptably), you can be more confident that the strategy is robust rather than severely overfitted.

- If the EA performs poorly on out-of-sample data (for example, turning from profitable to losing), this is a strong warning sign! It likely indicates your EA is severely overfitted, and the previously found "best" parameters are unreliable.
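The two outcomes above can be turned into a rough screening rule. The sketch below compares profit per bar rather than total profit, because the in-sample and out-of-sample segments usually differ in length. The function name `assess`, its labels, and the 0.5 `min_ratio` threshold are all hypothetical choices, not an industry standard.

```python
def assess(is_profit, is_bars, oos_profit, oos_bars, min_ratio=0.5):
    """Rough reading of in-sample (IS) vs out-of-sample (OOS) results.

    min_ratio is a hypothetical threshold: the minimum share of the IS
    profit-per-bar that the OOS segment should retain.
    """
    if is_profit <= 0:
        return "no edge in-sample"
    if oos_profit <= 0:
        # Profitable in-sample but losing out-of-sample: the classic warning sign.
        return "warning: likely overfitted"
    is_rate = is_profit / is_bars    # normalize so segments of different length compare fairly
    oos_rate = oos_profit / oos_bars
    if oos_rate / is_rate < min_ratio:
        return "weaker out-of-sample: investigate"
    return "reasonably consistent"


# Made-up numbers, purely to show the three readings for a profitable in-sample run.
print(assess(120.0, 700, -15.0, 300))  # warning: likely overfitted
print(assess(120.0, 700, 10.0, 300))   # weaker out-of-sample: investigate
print(assess(120.0, 700, 45.0, 300))   # reasonably consistent
```

Any single threshold is a judgment call; the value of writing it down is that you decide what counts as "acceptable" before seeing the out-of-sample report.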

Why is this important? (Addressing your concerns)

- Reduce fear of losses: Out-of-Sample Testing provides a test closer to "real trading". If the strategy performs poorly in out-of-sample testing, it warns you before risking real money. Understanding the strategy's true potential risks helps manage expectations and reduce fear of future losses.

- Combat overfitting traps: This is one of the most direct and effective ways to catch overfitting. Many people are easily misled by the perfect in-sample backtest reports produced after optimization, but out-of-sample testing helps you expose this "illusion".

- Build more realistic confidence: Only when the EA performs reasonably on both in-sample and out-of-sample data can you build more realistic confidence in the strategy, rather than false confidence caused by overfitting.

How to perform these two tests? (Simple concept)

The usual approach is to split your historical data into two (or more) segments:

- In-Sample: Use this segment for optimization to find the best parameters.

- Out-of-Sample: "Hide" this segment completely from the optimization process. After optimization is complete, run a normal backtest on this segment using the best parameters found to see the results.
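Here is a minimal sketch of the split itself. It assumes bars are stored as hypothetical `(date, close)` tuples and that the out-of-sample segment is the most recent 30% of the history; both the format and the 30% share are illustrative assumptions. The bars are sorted by time and never shuffled, so no later data leaks into the optimization period.

```python
from datetime import date, timedelta


def split_chronologically(bars, oos_fraction=0.3):
    """Split (date, close) bars into (in_sample, out_of_sample) lists.

    Bars are sorted by date and never shuffled, so the out-of-sample
    segment is the most recent slice and stays hidden from optimization.
    """
    if not 0 < oos_fraction < 1:
        raise ValueError("oos_fraction must be strictly between 0 and 1")
    ordered = sorted(bars, key=lambda bar: bar[0])
    n_oos = round(len(ordered) * oos_fraction)  # number of most recent bars to hide
    cut = len(ordered) - n_oos
    return ordered[:cut], ordered[cut:]


# Ten hypothetical daily bars, deliberately listed newest first.
bars = [(date(2024, 1, 1) + timedelta(days=i), 100.0 + i) for i in range(10)][::-1]
in_sample, out_of_sample = split_chronologically(bars, oos_fraction=0.3)
print(in_sample[-1][0], "|", out_of_sample[0][0])  # 2024-01-07 | 2024-01-08
```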

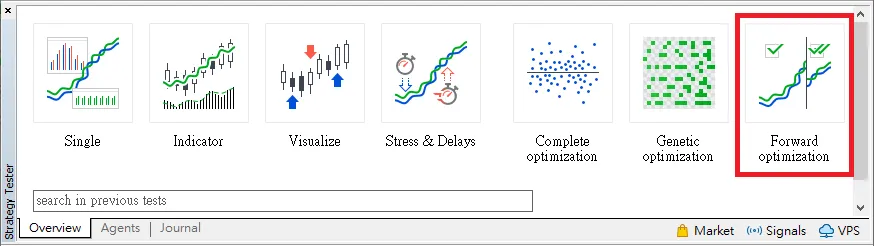

Some trading platforms (such as MT5) provide a "Forward Testing" feature in their strategy tester, which can help automate this data splitting and testing process.
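With a built-in forward test, you typically choose what share of the tested date range to reserve as the forward (out-of-sample) period, for example one quarter. The sketch below only estimates where that forward period would start. It assumes the forward segment is simply the final fraction of the range measured in calendar days; the tester's own rounding and date alignment may differ, so treat this as a planning aid, not a reproduction of MT5's logic. The function name `forward_start` is hypothetical.

```python
from datetime import date, timedelta
from fractions import Fraction


def forward_start(start: date, end: date, forward_share: Fraction) -> date:
    """Approximate first day of the forward (out-of-sample) period.

    Assumption: the forward period is the last `forward_share` of the
    calendar days between `start` and `end`, rounded down to whole days.
    """
    if not 0 < forward_share < 1:
        raise ValueError("forward_share must be strictly between 0 and 1")
    total_days = (end - start).days
    forward_days = total_days * forward_share.numerator // forward_share.denominator
    return end - timedelta(days=forward_days)


# Hypothetical 4-year test range with the last quarter held out as the forward period.
print(forward_start(date(2020, 1, 1), date(2024, 1, 1), Fraction(1, 4)))  # 2023-01-01
```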

Summary: Key Steps to Validate Optimization Results

Optimizing EA parameters may make the strategy look better, but validation is necessary.

- In-Sample Testing helps you find "potential" parameters.

- Out-of-Sample Testing helps you verify if these parameters are truly "reliable".

By applying both tests, you can gain deeper insight into the robustness of your EA strategy, reduce the risk of overfitting, and make wiser trading decisions.

Final reminder: Even if an EA performs well in both in-sample and out-of-sample tests, this is still based on past data.

Before investing real money, the most important final step is always to conduct live testing in a "Demo Account".

Let the EA run in the current market environment for a period of time and observe its actual performance—this is the ultimate test.

Hi, we are the Mr.Forex Research Team

Trading requires not just the right mindset, but also useful tools and insights. We focus on global broker reviews, trading system setups (MT4 / MT5, EA, VPS), and practical forex basics. We personally teach you to master the "operating manual" of financial markets, building a professional trading environment from scratch.

If you want to move from theory to practice:

1. Help share this article to let more traders see the truth.

2. Read more articles related to Forex Education.